Table Of Content

Recap

Profiling

Our Trading Rule: The Moving Average Crossover

Exponential Moving Averages

Pulling Data From Our DB

Installing Dependencies

The Python Psycopg2 Connector

Intermediate Step - Cleaning Data

Filling vs. Dropping Missing Data

Handling Duplication

Calculating The EMA

Plotting the EMAs

Calculating EMA Crossover

How To Trade It - Little Roadmap

Recap

Last week we had a look at the most commone database index, the B-tree, and how using it speeds up pulling data out of our datahub. By looking at the execution plan the PostgreSQL query planner came up with, we found that we were utilizing a SEQ SCAN to search through all entries one by one when filtering the symbol column because it was missing an index and fixed that.

This workflow is called profiling.

Profiling

Profiling helps us identify what parts of our system are slow, so called bottlenecks, and also find out why they're slow so we can work on them to improve the systems peformance. Any measurable resource can be profiled, not just our database. We didn't really code all that much yet but we can apply similar techniques to our code, measuring CPU time, memory usage, network bandwidth, disk I/O, etc.

The really nice thing about profiling is its ability to pinpoint bottlenecks with great accuracy. A lot of developers tend to rely on their intuition when optimizing performance. I don't know if that's an ego thing or not but even with over a decade of corporate coding experience, I still resort to profiling first. I don't want to end up "fixing" something I thought was the problem but then turns out to not be related at all, leaving me no other choice as to roll back and start over. (Might also be a skill issue, idk). I'm quite lazy so I want to put in the least amount of work possible to get the job done. Profiling helps me to keep my workflow lean and clean (other people like to call this efficient).

While optimizing PostgreSQL SELECTS is fairly easy, profiling python code is somewhat more complex. There are more sources of potential bottlenecks and depending on the situation we need to apply different techniques like reducing overhead or writing more suitable algorithms depending on the task at hand. We probably need to dedicate at least one full issue to this so the conversation is meaningful and relevant. Nontheless we're going to try and steadily incorporate more and more profiling approaches to our application as we go.

Let's circle back to our research lab for now though!

Our Trading Rule: The Moving Average Crossover

If we pull up the current version of our Trading Strategy, we can see that we decided to rely on the Moving Average Crossover as a trading rule to measure price momentum for our trading decisions. As a quick refresher: each time the slower Moving Average crosses over the faster Moving Average, we anticipate price rises and want to be long (and vice versa for short).

Exponential Moving Averages

The concept of the moving average and its crossovers in itself is a fine measure for momentum strength but we actually can do better than using just a simple moving average!

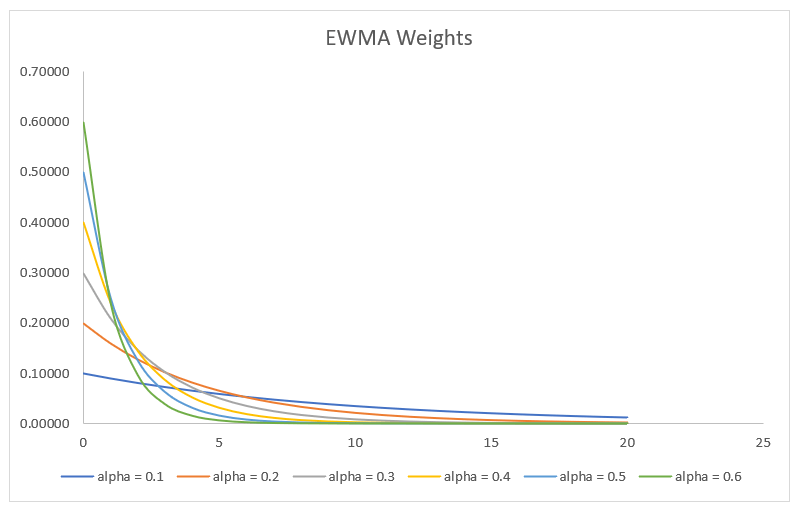

The Exponential Moving Average - short EMA or EWMA - is a type of moving average that gives more weight to recent prices by exponentially decaying old data - hence the name EMA - which makes it more responsive to newer information. Due to its weighting, the natural lag effect gets reduced considerably compared to simple moving averages. While older information is still statistically relevant, it is newer data that helps us spot emerging trends and trend shifts more quickly.

Its formula looks like this:

where α describes the weight assigned to the most recent prices on a scale of 0-1.

It gets computed as a recursive function where current observations (t) are calculated based on previous observations (t-1).

While α also holds the information how many data points are going to be used for EMAt, it's rather counter-intuitive to set its value directly. Usually we specify some kind of lookback to build our moving averages like MA(8) or MA(32). Luckily, since lookback (n) and α are related like that, we can also solve it the other way around:

where n is the number of days we want to use for the lookback.

Pulling Data From Our DB

Let's create our 2 moving averages, EMA(8) and EMA(32). For this we need some historical price data first. We're going to use the python library psycopg2 as PostgreSQL adapter to connect to our db and execute the query

SELECT ohlcv.time_close, ohlcv.close

FROM ohlcv

JOIN coins ON ohlcv.coin_id = coins.id

WHERE coins.symbol = 'BTC';

After that we'll load the data into a pandas dataframe and use its ewm()function to calculate our EMAs.

Installing Dependencies

First we need to install the following dependencies using pip:

pip install pandas psycopg2 python-dotenv matplotlib

or just run pip install -r requirements.txt from this weeks GitHub repository.

This step is needed because our python script is running locally and not within the docker container of the datahub. In the future we'll tug our logic away behind a nice GUI, which will also run dockerized, but for now we're going to execute manually on our machine.

pip will probably squack at you with something along the lines of Error: pg_config executable not found. and give "useful" tips like please install the PyPI 'psycopg2-binary' package instead. This is because we did not install PostgreSQL locally yet. Our database is running in its own docker container, which installed everything needed to run the PostgreSQL instance inside of the container but not our system.

If you encounter the above error, give your system a good ol apt install libpq-dev (for Ubuntu/Debian based OS) and rerun the pip install -r requirements.txt command.

The Python Psycopg2 Connector

After installing all needed dependencies, we're almost ready to run our python script. There's just one step we need to complete first. Since we're using the python library dotenv to read the credentials for authentication with our database from our .env file but didn't specify its HOST yet, we need to add it in there:

DB_USER=postgres

DB_PW=password

DB_DB=postgres

DB_PORT=5432

DB_HOST=localhost

Now we can run our script and print out the data we're getting:

import pandas as pd

import psycopg2

from dotenv import load_dotenv

import os

load_dotenv()

conn = psycopg2.connect(

dbname=os.environ.get("DB_DB"),

user=os.environ.get("DB_USER"),

password=os.environ.get("DB_PW"),

host=os.environ.get("DB_HOST"),

port=os.environ.get("DB_PORT")

)

cur = conn.cursor()

cur.execute("""

SELECT ohlcv.time_close, ohlcv.close

FROM ohlcv

JOIN coins ON ohlcv.coin_id = coins.id

WHERE coins.symbol = 'BTC';

""")

rows = cur.fetchall()

cur.close()

conn.close()

df = pd.DataFrame(rows, columns=['time_close', 'close'])

print(df.tail())

# time_close close

# 6014 2024-12-04 23:59:59 0.000552

# 6015 2024-12-05 23:59:59 0.000993

# 6016 2024-12-06 23:59:59 0.001550

# 6017 2024-12-07 23:59:59 0.001395

# 6018 2024-12-08 23:59:59 0.001103

Notice anything weird?

According to our pandas dataframe, BTCs price on the 08th of December 2024 was $0.001103! How does this make sense?

TLDR: It doesn't!

Let's check our coins table in dbeaver

SELECT * FROM coins

WHERE symbol='BTC'

There seems to be multiple different coins using BTC as symbol. We definitely need to do something about that. Symbols should be unique! For now let's just rename the symbols that don't represent the real Bitcoin and jot down that we need to sanitize our database later.

You can edit the symbols easily in Dbeaver - just like in an excel sheet - by clicking into the fields and hitting Save at the bottom of the panel after you're done.

If we run the python script again, the results should be fixed:

# time_close close

#5257 2024-12-04 23:59:59 98768.527555

#5258 2024-12-05 23:59:59 96593.572272

#5259 2024-12-06 23:59:59 99920.714730

#5260 2024-12-07 23:59:59 99923.336619

#5261 2024-12-08 23:59:59 101236.013140

ALWAYS CHECK THE DATA YOU'RE WORKING WITH!

Intermediate Step - Cleaning Data

We're making progress to our EMA calculation but we're still not quite there yet. Don't fret though, it's just one more intermediate step.

Before doing any kind of data analysis, it's beneficial to clean the data you're working with first. For this example it's kind of overkill but it's still a good habit to think about what you're going to do with the data and than sanitize it properly.

Since we will use pandas ewm() function, which under the hood uses the rolling() expression to traverse through the time series in a sliding window fashion, we need to make sure we have no missing values in the series. Rolling functions usually require all of the values in its window to be non-NA.

Missing data occurs frequently but pandas makes it easy to deal with: its isna() function abstracts most of the cases, like missing values, None-values, etc., away. We can check for them by calling it on the dataframe:

missing_value_rows = df.isna().any(axis=1)

print(df[missing_value_rows])

# Empty DataFrame

# Columns: [time_close, close]

# Index: []

Filling vs. Dropping Missing Data

It looks like our dataframe isn't missing any close prices. If it were, we would have to choose between two options to handle it: option a) using dropna() to remove the rows or option b) using a filling method to insert some values into the empty columns it.

As a rule of thumb, if you have a lot of columns - which we don't have, we only have close prices as a column besides the date index - but only one column lacks values, it's better to fill it to not lose all the values in the other columns of the same rows.

This kind of depends on your data though, so inspecting the rows using isna() first is a good way of being somewhat cautious and digging a little deeper.

Since we don't have any other columns, we could've simply dropped rows with missing close prices.

Handling Duplication

You can end up with duplication in your dataframe for a number of reasons, joining, merging, reshaping to only name a few. Almost all of them don't really apply because we didn't do anything with our data yet.

Dropping duplicates is awfully similar to the missing data workflow: identify with duplicated(), inspect, drop_duplicates() before further processing. It's also a good idea to check if you can pinpoint where the duplication happened and if you can fix that if it's a bug.

Calculating The EMA

We're finally ready to calculate our EMAs!

Pandas documentation provides us with the details for its ewm() function, which luckily has the paramter span in it. It works just like your usual moving average lookback and calculates the needed decay factor α for us. To get its actual EMA value, we need to call mean() on its result:

[...]

df = pd.DataFrame(rows, columns=['time_close', 'close'])

df['ema_fast'] = df['close'].ewm(span=8).mean()

df['ema_slow'] = df['close'].ewm(span=32).mean()

print(df.tail())

# time_close close ema_fast ema_slow

# 5257 2024-12-04 23:59:59 98768.527555 96596.377356 89442.172271

# 5258 2024-12-05 23:59:59 96593.572272 96595.754004 89875.590453

# 5259 2024-12-06 23:59:59 99920.714730 97334.634166 90484.385863

# 5260 2024-12-07 23:59:59 99923.336619 97909.901378 91056.443485

# 5261 2024-12-08 23:59:59 101236.013140 98649.037325 91673.387100

Plotting the EMAs

Let's plot the close prices and EMAs real quick and compare them with TradingView to confirm we didn't mess something up.

import matplotlib.pyplot as plt

df_plot = df.tail(80)

plt.plot(df_plot['time_close'], df_plot['close'], label='close')

plt.plot(df_plot['time_close'], df_plot['ema_fast'], label='ema_fast')

plt.plot(df_plot['time_close'], df_plot['ema_slow'], label='ema_slow')

plt.legend()

plt.show()

This is what we get

And this is TradingViews version

Looks awfully similar and it's values also check out:

5260 2024-12-07 23:59:59 99923.336619 97909.901378 91056.443485

5261 2024-12-08 23:59:59 101236.013140 98649.037325 91673.387100

TradingView

They deviate a little bit but only 0.03%, which stems from the fact that we didn't source our historical data from Bitstamp like the TradingView chart did.

On to the last part of this article:

Calculating EMA Crossover

Calculating the EMA Crossover value is easy, it's just the difference between the fast and the slow EMA. A positive value indicates a recent price uptrend and a downtrend for negative ones.

df['ema_crossover'] = df['ema_fast'] - df['ema_slow']

print(df.tail())

# time_close close ema_fast ema_slow ema_crossover

# 5257 2024-12-04 23:59:59 98768.527555 96596.377356 89442.172271 7154.205086

# 5258 2024-12-05 23:59:59 96593.572272 96595.754004 89875.590453 6720.163552

# 5259 2024-12-06 23:59:59 99920.714730 97334.634166 90484.385863 6850.248302

# 5260 2024-12-07 23:59:59 99923.336619 97909.901378 91056.443485 6853.457893

# 5261 2024-12-08 23:59:59 101236.013140 98649.037325 91673.387100 6975.650224

How To Trade It - Little Roadmap

The truth is, we don't have a way to trade it yet. Sure, we know our preferred direction based on the EMAC value but there's more to it! Next week we're going to figure out how to transform this into an actual forecast. Since we want to continously stay exposed to the market one way or the other, we need to not only define if we want to buy or sell but also how much we want to put into our position. "Stronger" trend forecasts should lead to bigger positions because we want to bet more on greater opportunities.

After finishing our very first backtest with just one simple trading rule, taking positions according to its forecast on just one single instrument, we're going to add more and more elements to our strategy. More rules, more variations, more instruments. Keeping it very simple at first makes it easier for us to iterate quickly after the base case works.

So long, Happy Trading!

- Hōrōshi バガボンド

Newsletter

Once a week I share Systematic Trading concepts that have worked for me and new ideas I'm exploring.