Table Of Contents

Recap

Turnover Recap

Correcting Trading Fee Calculation

Putting Fees Into Context

Fetching Funding Rates

Paginating Historical Funding Rates

Aggregating & Incorporating Funding

Next Up

Recap

Last issue we improved our backtest by adding yearly turnover calculation to its performance metrics and fixing a few bugs. In todays article we're going to have another look at trading costs, specifically funding fees.

Updating Historical Prices DB

It's been a while since we pulled in new historical prices. We can easily do this by commenting in the scraper part in the docker-compose.yml of our datahub again and then run it with docker-compose up (you might have to use docker-compose up --build depending on your current state of the repo):

services:

db:

image: postgres:latest

environment:

- POSTGRES_USER=${DB_USER}

- POSTGRES_PASSWORD=${DB_PW}

- POSTGRES_DB=${DB_DB}

ports:

- "${DB_PORT}:${DB_PORT}"

volumes:

- postgres_data:/var/lib/postgresql/data

data_scraper:

build:

context: ./data_scraper

dockerfile: Dockerfile

environment:

- DB_USER=${DB_USER}

- DB_PW=${DB_PW}

- DB_DB=${DB_DB}

- DB_PORT=${DB_PORT}

depends_on:

- db

volumes:

postgres_data:

It'll start fetching data right away. This might take up to 12-24h. Don't worry, we're going to fix this soon. Right now, we're still focusing on building out our lab. The data we're working with should mostly be seen as dummy data for the development process. Waiting 12-24h every one or two months is fine in my opinion.

We obviously get different results when we run our backtest.py now:

# current BTC current_price 78532.0018084677

# contract value 78532.0018084677

# Strategy Total Return 969.7486237812502

# Strategy Avg. Annual Return 66.49600745447235

# Strategy Daily Volatility 1.7759600711002572

# Strategy Sharpe Ratio 1.9598188959757952

# Fees paid 4135.925321806874

# Instr Daily Mean Return 0.003815858387315305

# Instr Daily Volatility 0.031071300570486668

# Instr Ann. Sharpe Ratio 2.346276814584308

However, as you can see, our annual turnover is the same: 33

Turnover Recap

Let's quickly reiterate the meaning of the word turnover. From last weeks article: "Ok, so what does an annual turnover of ~33.23 a year mean? It means we're resetting a typical (average) sized position roughly 33 times a year using the EMAC(8,32) Crossover as our signal."

Some of you contacted us to ask if this is the amount of contracts we're trading each year.

No! If turnover would measure the amount of contracts traded, it would depend on our bankroll. Since we want to use turnover to purely measure for risk-adjusted returns so we can compare across different strategies, trading rules and instruments, we need to decouple our metrics from the amount of money in our account.

A turnover of 33 does not imply that we're trading 33 contracts each year but that how often we trade our average position size by comparing the amount of contracts traded with our strategies average traded. So in our example we're trading our average position of ~0.0376 contracts 33 times a year.

Correcting Trading Fee Calculation

Right now, the fees we pay get calculated using our full notional exposure every day:

[...]

# ByBit fees

# fee = 0.001 #spot

fee = 0.0055 # perp & futures

[...]

for index, row in df.iterrows():

[...]

notional_per_contract = (row['close'] * 1 * contract_unit)

df.at[index, 'notional_per_contract'] = notional_per_contract

daily_usd_vol = notional_per_contract * row['instr_perc_returns_vol']

df.at[index, 'daily_usd_vol'] = daily_usd_vol

units_needed = daily_cash_risk / daily_usd_vol

df.at[index, 'units_needed'] = units_needed

forecast = row['capped_forecast']

pos_size_contracts = units_needed * forecast / 10

df.at[index, 'pos_size_contracts'] = pos_size_contracts

notional_pos = pos_size_contracts * notional_per_contract

df.at[index, 'notional_pos'] = notional_pos

daily_fees = notional_pos * fee

df.at[index, 'daily_fees'] = daily_fees

This is wrong! In reality we only need to pay our notional traded that makes up our full position each day, not our entire position. So if we have 11 contracts, and we buy 1 contract, we don't need to pay for 12 contracts but only the 1 added contract.

[...]

# ByBit fees

# fee = 0.001 #spot

fee = 0.0055 # perp & futures

[...]

annual_cash_risk_target = trading_capital * annual_perc_risk_target

daily_cash_risk = annual_cash_risk_target / np.sqrt(trading_days_in_year)

for index, row in df.iterrows():

notional_per_contract = (row['close'] * 1 * contract_unit)

df.at[index, 'notional_per_contract'] = notional_per_contract

daily_usd_vol = notional_per_contract * row['instr_perc_returns_vol']

df.at[index, 'daily_usd_vol'] = daily_usd_vol

units_needed = daily_cash_risk / daily_usd_vol

df.at[index, 'units_needed'] = units_needed

forecast = row['capped_forecast']

pos_size_contracts = units_needed * forecast / 10

df.at[index, 'pos_size_contracts'] = pos_size_contracts

notional_pos = pos_size_contracts * notional_per_contract

df.at[index, 'notional_pos'] = notional_pos

if df.index.get_loc(index) > 0:

prev_idx = df.index[df.index.get_loc(index) - 1]

prev_contracts = df.at[prev_idx, 'pos_size_contracts']

df.at[index, 'prev_contracts'] = prev_contracts

if np.isnan(prev_contracts):

prev_contracts = 0

contract_diff = (pos_size_contracts - prev_contracts)

df.at[index, 'contract_diff'] = contract_diff

notional_traded = contract_diff * notional_per_contract

df.at[index, 'notional_traded'] = notional_traded

daily_usd_fees = abs(notional_traded) * fee

else:

daily_usd_fees = 0

df.at[index, 'daily_usd_fees'] = daily_usd_fees

Note that this code is really ugly! This is fine though. As soon as we finish the first iteration of our real backtest, we're going to add a testsuite around our code. This will enable us to safely make changes without breaking things so we can switch out current implementations for more prettier and performant versions.

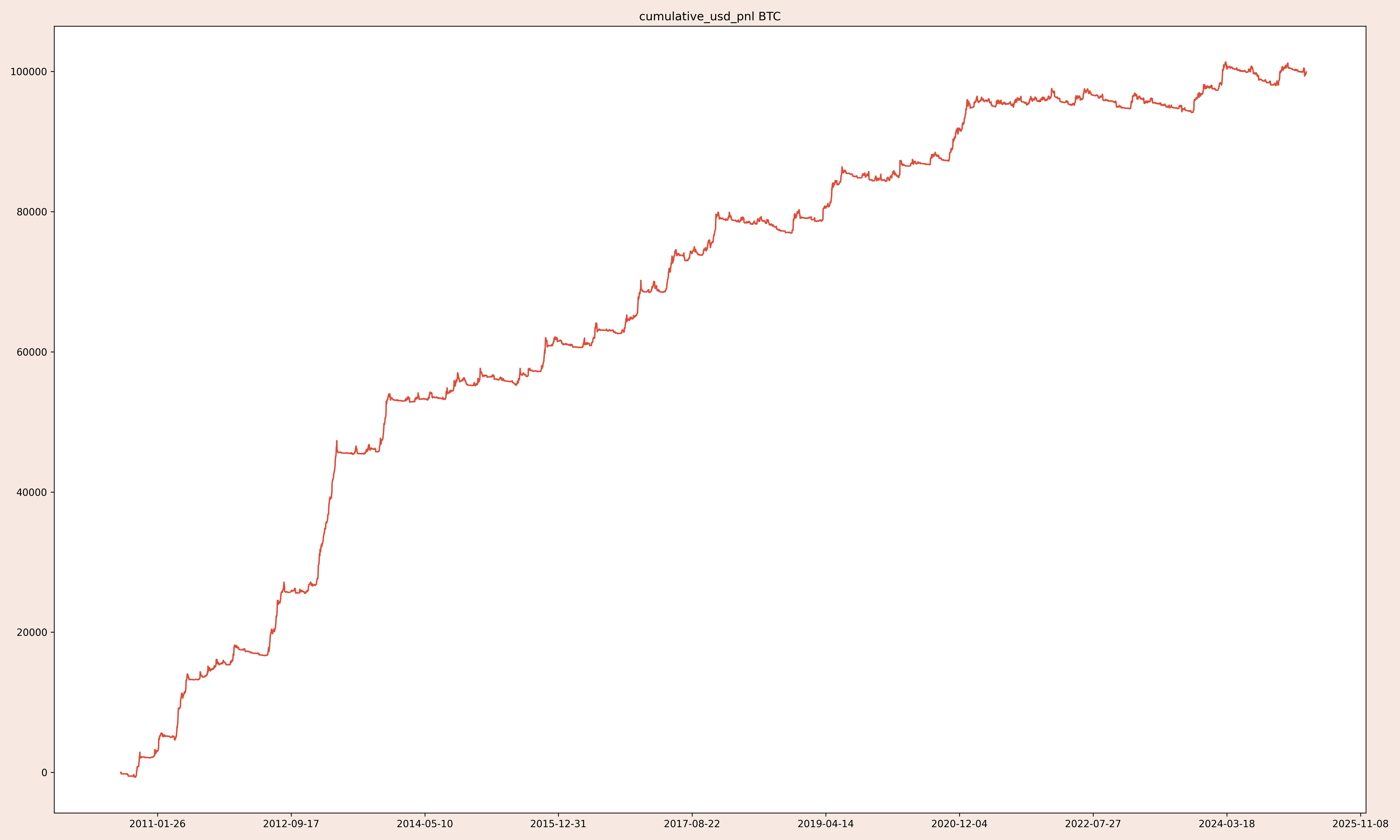

How does this change things? Let's compare the EQ curves of both, the previous and our current implementation:

# current BTC current_price 78532.0018084677

# contract value 78532.0018084677

# Strategy Total Return 969.7486237812502

# Strategy Avg. Annual Return 66.49600745447235

# Strategy Daily Volatility 1.7759600711002572

# Strategy Sharpe Ratio 1.9598188959757952

# Fees paid 4135.925321806874

# Instr Daily Mean Return 0.003815858387315305

# Instr Daily Volatility 0.031071300570486668

# Instr Ann. Sharpe Ratio 2.346276814584308

And here's our current one:

# current BTC current_price 78532.0018084677

# contract value 78532.0018084677

# Strategy Total Return 999.2544727007391

# Strategy Avg. Annual Return 68.50636411265398

# Strategy Daily Volatility 1.7802102822831063

# Strategy Sharpe Ratio 2.0142491133267324

# Fees paid 1185.3404298579717

# Instr Daily Mean Return 0.003815858387315305

# Instr Daily Volatility 0.031071300570486668

# Instr Ann. Sharpe Ratio 2.346276814584308

Doesn't look that much different, does it? That's because in the grand scheme of things, it really doesn't make that much of a difference. At least not that much that you could spot it easily on a chart:

# Previous Implementation

[...]

print('Strategy Sharpe Ratio', strat_sr.iloc[-1], '\n')

print('Fees paid', df['daily_usd_fees'].sum(), '\n')

# Strategy Sharpe Ratio 1.9598188959757952

# Fees paid 4135.925321806874

# Current Implementation

[...]

print('Strategy Sharpe Ratio', strat_sr.iloc[-1], '\n')

print('Fees paid', df['daily_usd_fees'].sum(), '\n')

# Strategy Sharpe Ratio 2.0142491133267324

# Fees paid 1185.3404298579717

As you can see, we got our costs down from $4,135 to $1,185 which equates to an Sharpe Ratio increase of ~0.054 or about ~2.7%.

Putting Fees Into Context

Now is $1,185 - or $4,135 for that matter - a lot or a little? At what point would we consider the fees paid to be too expensive too trade?

To answer this question, we need to put the costs of trading into context again. Just as we did with our returns, we can adjust our costs by the instruments annualized standard deviation.

There's a really easy calculation we can use to calculate our current annualized trading costs in risk-adjusted terms. We can simply substract our post-cost SR from our pre-cost SR! We already have these values because we calculated them earlier for our performance report as strat_raw_usd_returns and strat_usd_returns (this is with fees deducted).

[...]

strat_raw_usd_std_dev_pre = strat_raw_usd_returns.ewm(35, min_periods=10).std()

strat_raw_usd_std_dev_post = strat_usd_returns.ewm(35, min_periods=10).std()

rolling_pre_cost_sr = np.sqrt(trading_days_in_year) * (strat_raw_usd_returns.mean() / strat_raw_usd_std_dev_pre)

rolling_post_cost_sr = np.sqrt(trading_days_in_year) * (strat_usd_returns.mean() / strat_raw_usd_std_dev_post)

strat_rolling_trading_costs_sr = rolling_pre_cost_sr - rolling_post_cost_sr

print('Pre-Cost SR', rolling_pre_cost_sr.iloc[-1])

print('Post-Cost SR', rolling_post_cost_sr.iloc[-1])

print('Risk-Adjusted Costs', strat_rolling_trading_costs_sr.iloc[-1])

# Pre-Cost SR 2.043151770812106

# Post-Cost SR 2.014249113326733

# Risk-Adjusted Costs 0.02890265748537324

We can also plot this:

[...]

def plot_ann_rolling_trading_costs_sr(rolling_sr):

_, ax = plt.subplots(figsize=(20, 12), facecolor='#f7e9e1')

ax.plot(rolling_sr.index, rolling_sr, color='#de4d39')

ax.set_title(f'Ann. Rolling Trading Costs SR {symbolname}')

ax.xaxis.set_major_locator(ticker.MaxNLocator(nbins=10))

plt.tight_layout()

plt.savefig(f'{symbolname}_rolling_trading_costs_sr.png', dpi=300)

[...]

plot_ann_rolling_trading_costs_sr(strat_rolling_trading_costs_sr)

There are other ways to measure and estimate trading costs going forward but this works as a quick and easy approximation for now.

Fetching Funding Rates

We now got a nice little framework to incorporate trading fees paid into our performance. Now with crypto perps, transaction fees aren't the only ones you need to pay. You're also going to pay something called funding. Depending on the exchange you're trading on, this might get calculated and deducted from your account once every 8 or 24 hours.

To reiterate what funding is all about, an excerpt from our first backtest article:

"When the index price is above your contracts mark price, funding is negative and longs get paid. If it's the other way around - index < mark - funding is positive and shorts get paid. The wider the difference between index and mark, the higher the funding.

I like to think of funding as a mechanism the exchange uses to create incentive for opening positions into the index direction. This additional pressure then should help tighten the spread between mark and index again."

To calculate how much funding we need to pay or going to get paid, we need to fetch the funding rates for the instrument traded, in this case the BTC perp. Funding is different for each exchange we trade on so we need to consult the API of our trading exchange Bybit for the data using their API documentation:

import requests

import json

def fetch_historical_funding_rates(symbol):

print('fetching historical funding rates for symbol', symbol)

url = f'https://api.bybit.com/v5/market/funding/history'

params = {

'symbol': symbol,

'category': 'linear',

}

try:

response = requests.get(url, params=params)

response.raise_for_status()

return response.json()

except requests.exceptions.RequestException as e:

print(e)

symbol = "BTC"

funding_rates = fetch_historical_funding_rates(symbol)

print(json.dumps(funding_rates, indent=4))

print(len(funding_rates['result']['list']))

This little script is going to try and fetch the historical funding rates for the symbol specified and return them to you. We put a try/except block around the call to the API and then raise_for_status() when we hit a 4xx or 5xx route. If we encounter any issues, we catch this exception and print out the error message the API provided so we can debug them. Right now we don't see any errors on that level though, we just don't get any data:

# fetching historical funding rates for symbol BTC

# {

# "retCode": 10001,

# "retMsg": "params error: Symbol Invalid",

# "result": {

# "category": "",

# "list": []

# },

# "retExtInfo": {},

# "time": 1741867474384

#}

If we change the url to something we know isn't available, we'd get something different:

[...]

url = f'https://api.bybit.com/v5/does_not_exist'

[...]

# fetching historical funding rates for symbol BTC

# 404 Client Error: Not Found for url: https://api-testnet.bybit.com/v5/does_not_exist?category=linear&symbol=BTC

# null

Wrapping your API calls this way is a common technique to make your application "crash" more gracefully if something goes wrong. You don't want to be in a situation where you start your script, leave your desk for hours, and then come back to see it crashed 2 minutes in and hasn't actually been putting in work while you were away. This is especially important for execution later on so building these things up gradually by tinkering with simple fetch calls is a nice way to better prepare yourself.

Back to the real issue though: the empty response for the correct funding history endpoint. It looks like the Bybit API can't find a symbol named BTC. That's because Bybit doesn't have a ticker named BTC. If we consult their typical symbol structure we can see that the symbol for the BTC USDT perpetual is BTCUSDT. We have to team this up with the correct category from here. Stubbing that in yields us funding rates:

# [...]

# {

# "symbol": "BTCUSDT",

# "fundingRate": "0.0001",

# "fundingRateTimestamp": "1736150400000"

# },

# {

# "symbol": "BTCUSDT",

# "fundingRate": "0.0001",

# "fundingRateTimestamp": "1736121600000"

# }

# ]

# },

# "retExtInfo": {},

# "time": 1741874424299

#}

# 200

Paginating Historical Funding Rates

We only get 200 historical funding rate rows though. That's the Bybit APIs limit. To be able to fetch all historical funding rates, we need to paginate the results ourselves. Not really the results but how we fetch them from the API. For this we're going to start far back in the past - like 1st of January 2011 - and work our way forwards. We need to specify our start_time and end_time to the API in milisecond epoch timestamps. Since every 8 hours a new funding rate row gets created, we need to do some math to calculate the correct timestamps to loop through.

import requests

import json

import time

def fetch_historical_funding_rates(symbol, start_time, end_time):

print('fetching historical funding rates for', symbol, start_time, end_time)

url = 'https://api.bybit.com/v5/market/funding/history'

params = {

'symbol': symbol,

'category': 'inverse',

'startTime': start_time,

'endTime': end_time,

}

try:

response = requests.get(url, params=params)

response.raise_for_status()

return response.json()

except requests.exceptions.RequestException as e:

try:

error_message = response.json().get('ret_msg', 'Unknown error')

except (json.JSONDecodeError, AttributeError):

error_message = str(e)

raise Exception(f"API request failed: {error_message}")

def fetch_all_funding_rates(symbol, start_time):

all_funding_rates = []

current_time = int(time.time()) * 1000 # Current time in milliseconds

end_time = start_time + (66 * 24 * 60 * 60 * 1000) # 66 days in milliseconds

while start_time < current_time:

if end_time > current_time:

end_time = current_time

funding_rates = fetch_historical_funding_rates(symbol, start_time, end_time)

if 'result' in funding_rates and 'list' in funding_rates['result']:

rates = funding_rates['result']['list']

all_funding_rates.extend(rates)

start_time = end_time

end_time = start_time + (66 * 24 * 60 * 60 * 1000)

else:

print("could not find funding rates in response")

return all_funding_rates

symbol = "BTCUSDT"

start_time = int(time.mktime(time.strptime('2011-01-01', '%Y-%m-%d'))) * 1000 # Convert to milliseconds

all_funding_rates = fetch_all_funding_rates(symbol, start_time)

print(json.dumps(all_funding_rates, indent=4))

print(f"Fetched {len(all_funding_rates)} funding rates")

# [...]

# fetching historical funding rates for BTCUSDT 1681599600000 1687302000000

# fetching historical funding rates for BTCUSDT 1687302000000 1693004400000

# fetching historical funding rates for BTCUSDT 1693004400000 1698706800000

# fetching historical funding rates for BTCUSDT 1698706800000 1704409200000

# fetching historical funding rates for BTCUSDT 1704409200000 1710111600000

# fetching historical funding rates for BTCUSDT 1710111600000 1715814000000

# fetching historical funding rates for BTCUSDT 1715814000000 1721516400000

# fetching historical funding rates for BTCUSDT 1721516400000 1727218800000

# fetching historical funding rates for BTCUSDT 1727218800000 1732921200000

# fetching historical funding rates for BTCUSDT 1732921200000 1738623600000

# fetching historical funding rates for BTCUSDT 1738623600000 1741941652000

# [...]

# "fundingRateTimestamp": "1738713600000"

# },

# {

# "symbol": "BTCUSDT",

# "fundingRate": "0.00007932",

# "fundingRateTimestamp": "1738684800000"

# },

# {

# "symbol": "BTCUSDT",

# "fundingRate": "0.0001",

# "fundingRateTimestamp": "1738656000000"

# },

# {

# "symbol": "BTCUSDT",

# "fundingRate": "-0.00001274",

# "fundingRateTimestamp": "1738627200000"

# }

# ]

# Fetched 5445 funding rates

Great! 5,445 are an awful lot more than 200! For now we're just going to persist them by loading the funding rates into a pandas dataframe and then using its to_csv() method.

import pandas as pd

df = pd.DataFrame(all_funding_rates)

df['time_close'] = pd.to_datetime(pd.to_numeric(df['fundingRateTimestamp']), unit='ms')

if not pd.api.types.is_datetime64_any_dtype(df['time_close']):

df['time_close'] = pd.to_datetime(df['time_close'])

df.set_index('time_close', inplace=True)

df.sort_values(by='time_close', inplace=True)

df.to_csv('BTC_funding_rates.csv')

Aggregating & Incorporating Funding

We can now use this data in our backtest by using pandas read_csv() and merging it with our current dataframe after resampling the funding rates to 1D. We have to resample because the funding dataframes index uses 8h intervals but our strategies dataframe index is on 1D. To be sure the funding rates get aggregated as daily values, we use the sum() function on the resample() object:

funding = pd.read_csv('BTC_funding_rates.csv')

funding['time_close'] = pd.to_datetime(funding['time_close'])

if not pd.api.types.is_datetime64_any_dtype(funding['time_close']):

funding['time_close'] = pd.to_datetime(funding['time_close'])

funding.set_index('time_close', inplace=True)

funding.sort_values(by='time_close', inplace=True)

funding = funding.resample(resample_period).sum()

print(funding)

# symbol fundingRate fundingRateTimestamp

# time_close

# 2020-03-25 BTCUSDT 0.000100 1585152000000

# 2020-03-26 BTCUSDTBTCUSDTBTCUSDT 0.000300 4755628800000

# 2020-03-27 BTCUSDTBTCUSDTBTCUSDT 0.000300 4755888000000

# 2020-03-28 BTCUSDTBTCUSDTBTCUSDT 0.000300 4756147200000

# 2020-03-29 BTCUSDTBTCUSDTBTCUSDT 0.000300 4756406400000

# ... ... ... ...

# 2025-03-10 BTCUSDTBTCUSDTBTCUSDT -0.000448 5224780800000

# 2025-03-11 BTCUSDTBTCUSDTBTCUSDT 0.000061 5225040000000

# 2025-03-12 BTCUSDTBTCUSDTBTCUSDT 0.000215 5225299200000

# 2025-03-13 BTCUSDTBTCUSDTBTCUSDT 0.000127 5225558400000

# 2025-03-14 BTCUSDTBTCUSDT -0.000114 3483849600000

If you think this looks weird, you are 100% correct. We did not only sum up the fundingRate column but all columns of the dataframe. To only sum up the fundingRate we have to use the agg() function instead of the sum() function and provide a dictionary in which we specify the aggregation function for each column:

[...]

funding = funding.resample(resample_period).agg({

'symbol': 'last',

'fundingRate': 'sum',

'fundingRateTimestamp': 'last'

})

print(funding)

# symbol fundingRate fundingRateTimestamp

# time_close

# 2020-03-25 BTCUSDT 0.000100 1585152000000

# 2020-03-26 BTCUSDT 0.000300 1585238400000

# 2020-03-27 BTCUSDT 0.000300 1585324800000

# 2020-03-28 BTCUSDT 0.000300 1585411200000

# 2020-03-29 BTCUSDT 0.000300 1585497600000

# ... ... ... ...

# 2025-03-10 BTCUSDT -0.000448 1741622400000

# 2025-03-11 BTCUSDT 0.000061 1741708800000

# 2025-03-12 BTCUSDT 0.000215 1741795200000

# 2025-03-13 BTCUSDT 0.000127 1741881600000

# 2025-03-14 BTCUSDT -0.000114 1741939200000

You could also just extract the 2 columns time_close and fundingRate since we're not really interested in anything else. We prefer option 2, extracting, just because it's easier to understand and works in this case. Note that the time_close column doesn't have to be specified while extracting. It gets extracted automatically because it is the index.

funding = funding.resample(resample_period).sum()

funding = funding['fundingRate']

print(funding)

# time_close

# 2020-03-25 0.000100

# 2020-03-26 0.000300

# 2020-03-27 0.000300

# 2020-03-28 0.000300

# 2020-03-29 0.000300

# ...

# 2025-03-10 -0.000448

# 2025-03-11 0.000061

# 2025-03-12 0.000215

# 2025-03-13 0.000127

# 2025-03-14 -0.000114

We don't need to convert these figures - like we did with the transaction fees - because they are already decimals:

# symbol fundingRate fundingRateTimestamp

# time_close

# [...]

# 2025-03-13 00:00:00 BTCUSDT 0.000055 1741824000000

# 2025-03-13 08:00:00 BTCUSDT 0.000085 1741852800000

# 2025-03-13 16:00:00 BTCUSDT -0.000013 1741881600000

# 2025-03-14 00:00:00 BTCUSDT -0.000159 1741910400000

# [...]

The only thing left to do now is to deduct the funding from our PNL based on our position size and direction. One key thing to note is that we have to handle missing funding rates. Our historical EOD prices go back to 2011 but the funding rates we just scraped from Bybit only reach back until 2020. For now we're going to fillna(0) them. In the future we'll build upon this and change the implementation to some kind of moving average or using correlation to backfill them in correlated batches to get a better approximation.

[...]

df = df.join(funding, how='left')

df['funding'] = df['funding'].fillna(0)

df['funding_paid'] = df['notional_pos'].shift(1) * df['funding']

strat_usd_returns = df['strat_usd_returns'] - df['funding_paid']

df['strat_usd_returns_after_funding'] = strat_usd_returns

[...]

print('Pre-Cost SR', rolling_pre_cost_sr.iloc[-1])

print('Post-Cost SR', rolling_post_cost_sr.iloc[-1])

print('Risk-Adjusted Costs', strat_rolling_trading_costs_sr.iloc[-1])

# With Transaction Fees Only

# Pre-Cost SR 2.043151770812106

# Post-Cost SR 2.014249113326733

# Risk-Adjusted Costs 0.02890265748537324

# With Transaction Fees & Funding Deducted

# Pre-Cost SR 2.043151770812106

# Post-Cost SR 1.9544492845343906

# Risk-Adjusted Costs 0.08870248627771549

Looking at the numbers, it looks like our costs of trading increased from ~0.029 to ~0.089 SR units, so about 207%. Or if you like to look at it the other way around, our Post-Cost SR decreased from ~2.014 to ~1.954, about 3.18%. Or if we look at it from Pre-Cost to Post-Cost, we decreased our SR from ~2.043 to ~1.954, which is about 4.55%.

This is our perfomance sheet now:

A quick littel side before we wrap this up: in live trading, since funding gets deducted or deposited into our account every X hours, we'd actually need to recalculate our risk and adjust our positions accordingly. However, the current implementation is enough to go forward. We're going to take a look at this and how to scrape more funding data and persist them to our database in a future issue when we have all the pieces of the puzzle in place and hardened them with a testsuite.

Next Up

Let's wrap this up. The full code can be found in this weeks GitHub repository. There's a new file called bybit_api.py with which we pulled in the funding using Bybits API. The code looks more and more like a mess so we probably have to do something about this soon. For this we'll introduce a proper testing suite so we can change our code without breaking its functionaility. We still have a few things on our list first though:

- decreasing turnover via error threshold,

- backfill funding

- adding slippage & bid-ask spread,

We're going to tackle all of these over the last few issues to make our backtest more well-rounded first. Then we're going to dive into coding techniques like testing, etc. to change things up: switch out implementations, incorporate more trading rules, trade more instruments, and then probably improving performance. The more feature we build into our backtest, the more data we need. The more data we work with, the slower it all becomes.

Anyway, this should do it for now. Happy trading!

- Hōrōshi バガボンド

Newsletter

Once a week I share Systematic Trading concepts that have worked for me and new ideas I'm exploring.